
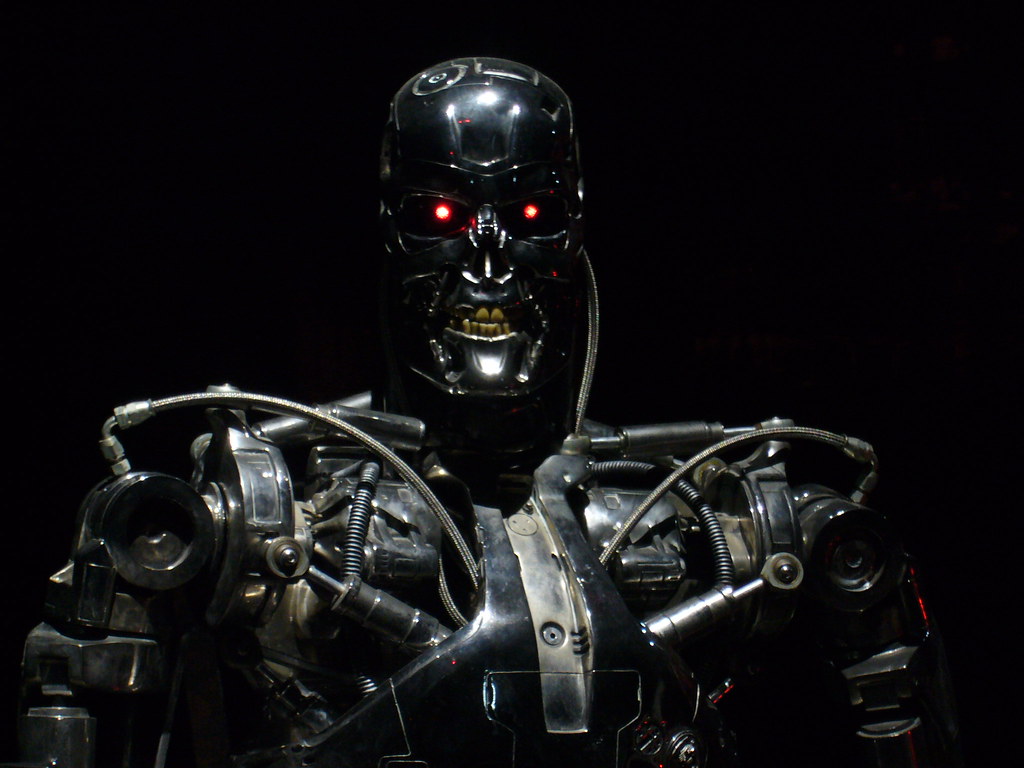

Image: Flickr user Dick Thomas Johnson

By Franz-Stefan Gady

The problem with applying euphemisms in policy discussions: they circumvent the truth.

As I have argued previously (“The One Thing Geeky Defense Analysts Never Talk About”), when we discuss defense policies we often leave out the most salient aspect of the conversation: new tactics, new strategies, and new military technologies have the singular purpose of more effectively threatening or killing other human beings.

Last week, I covered the story (“Is a Killer Robot Arms Race Inevitable?”) of an open letter signed by 1,000 artificial intelligence and robotics researchers, including Apple co-founder Steve Wozniak, Google DeepMind chief executive Demis Hassabis, and Professor Stephen Hawking, that calls for a ban on “offensive autonomous weapons beyond meaningful human control.”

The petition, while noble, calls for a ban that will be difficult to uphold given the dialectical nature of military competition. What is more immediately striking, however, is the euphemism-filled debate we are having (or rather not having) on the subject. Current discussions focus on the technical details and legal aspects of deploying killer robots. For example, Kelley Sayler, in a piece for Defense One, makes the following argument:

> On the contrary, autonomous weapons could shape a better world — one in which the fog of war thins, if ever so slightly. These systems are not subject to the fatigue, combat stress, and other factors that occasionally cloud human judgment. And if responsibly developed and properly constrained, they could ensure that missions are executed strictly according to the rules of engagement and the commander’s intent. A world with autonomous weapons could be one where the principles of international humanitarian law (IHL) are not only respected but also strengthened.

But this does not compute. For starters: killer robots will not and cannot strengthen international humanitarian law. Why? Because – nomen est omen – killer robots (like remotely-controlled weapon systems) will make the killing of other human beings easier and consequently increase the likelihood of “superfluous injury or unnecessary suffering” during war.

Read the full story at The Diplomat